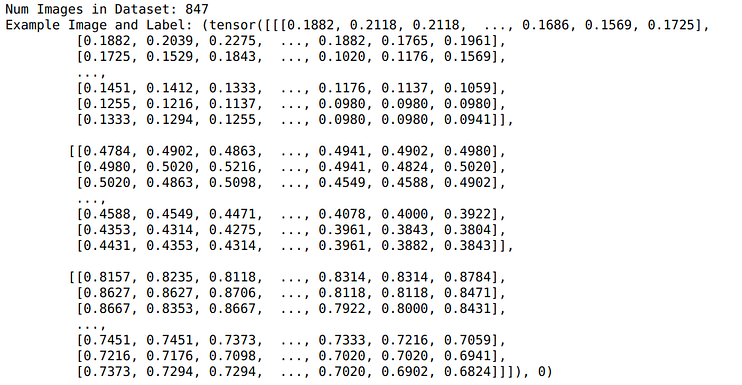

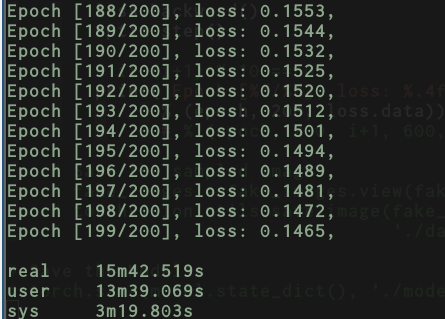

41 pytorch dataloader without labels

docs.nvidia.com › deeplearning › dali
NVIDIA DALI Documentation — NVIDIA DALI 1.19.0 documentation. Q: How easy is it to integrate DALI with existing pipelines such as PyTorch Lightning? Q: Does DALI typically result in slower throughput using a single GPU versus using multiple PyTorch worker threads in a data loader? Q: Will labels, for example, bounding boxes, be adapted automatically when transforming the image data?

github.com › pytorch › pytorch
Get a single batch from DataLoader without iterating #1917. Is it possible to get a single batch from a DataLoader? Currently, I set up a for loop and return a batch manually. If there isn't a way to do this with the DataLoader currently, I would be happ...
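The single-batch question above is often handled by wrapping the loader in `iter()` and calling `next()` once. The following is a minimal sketch of that idiom, not the resolution of the linked issue; the `features` tensor and the batch size are made-up values for illustration, and the dataset deliberately carries no labels.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical unlabeled data: 10 samples with 3 features each.
features = torch.arange(30, dtype=torch.float32).reshape(10, 3)

# TensorDataset accepts a single tensor, so no label tensor is needed.
dataset = TensorDataset(features)
loader = DataLoader(dataset, batch_size=4, shuffle=False)

# Pull exactly one batch without writing a for loop.
# Each batch is a one-element sequence because the dataset holds one tensor.
(first_batch,) = next(iter(loader))
print(first_batch.shape)
```

Note that every call to `iter(loader)` starts a fresh pass over the data, so this is best suited to quick inspection rather than training loops.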

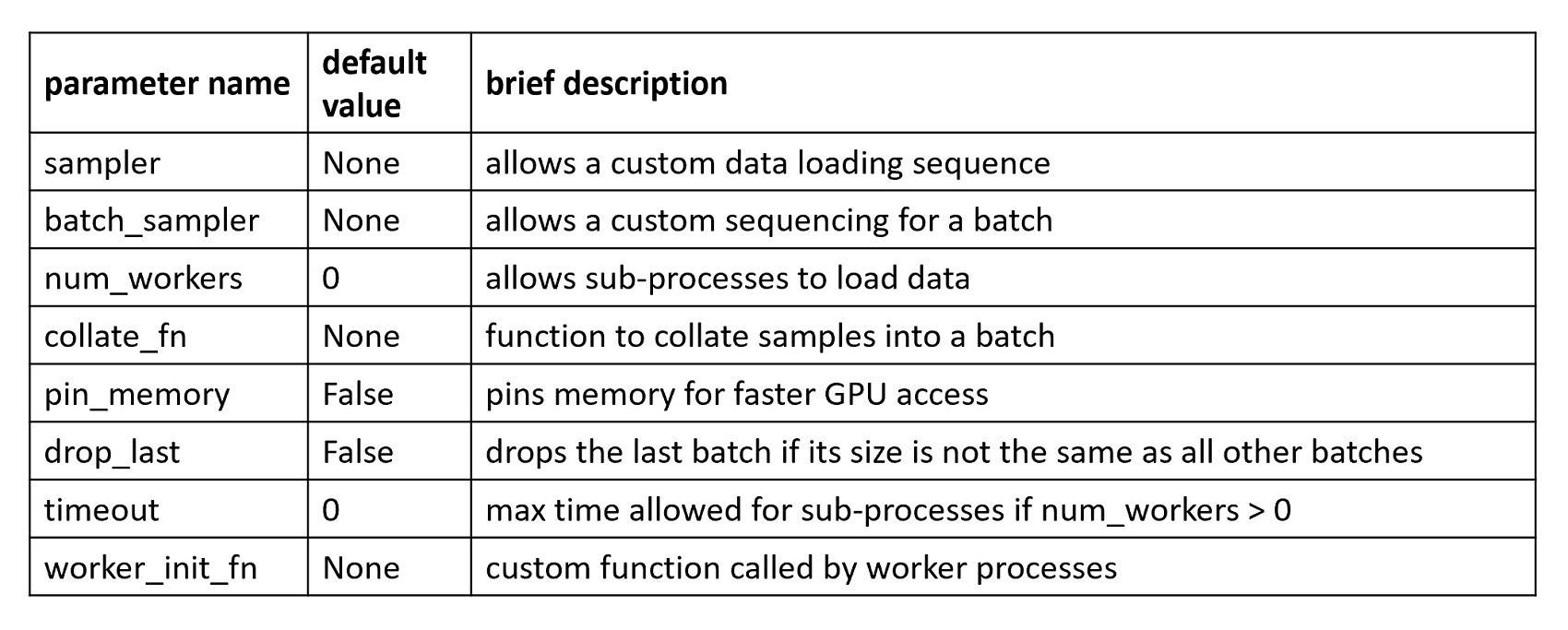

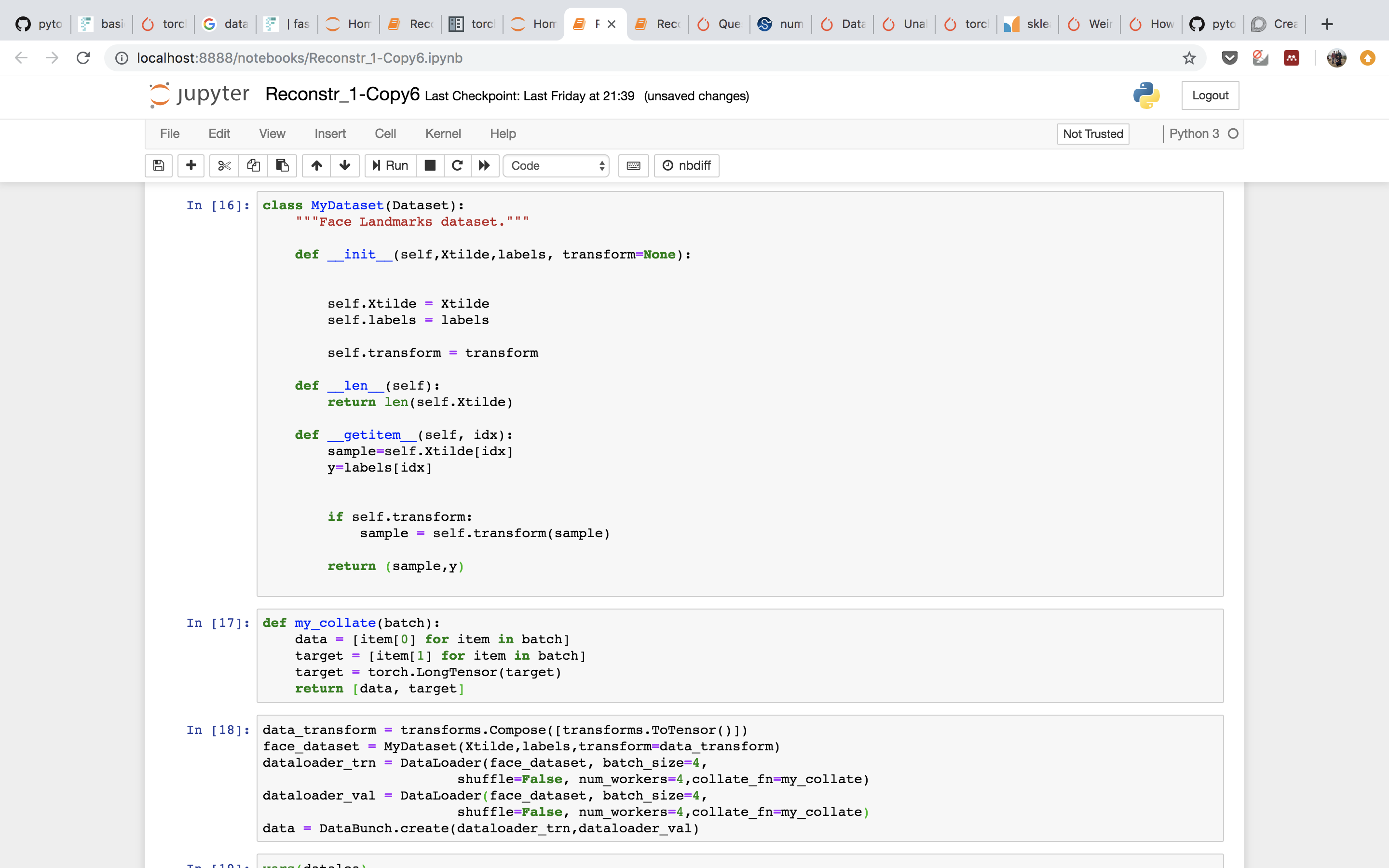

stackoverflow.com › questions › 65279115
python - How to use 'collate_fn' with dataloaders? - Stack ... Dec 13, 2020 · DataLoader(toy_dataset, collate_fn=collate_fn, batch_size=5) With this collate_fn function, you will always get a tensor in which all examples have the same size. So, when you feed this data to your forward() function, you need to use the lengths to recover the original data, so that the meaningless padding zeros are not used in your computation.
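The snippet above does not show the `collate_fn` itself, so the version below is an assumption: a small sketch that zero-pads variable-length sequences with `torch.nn.utils.rnn.pad_sequence` and returns the original lengths alongside the padded tensor. The contents of `toy_dataset` are invented for illustration.

```python
import torch
from torch.nn.utils.rnn import pad_sequence
from torch.utils.data import DataLoader

# Hypothetical toy data: five unlabeled sequences of different lengths.
toy_dataset = [
    torch.tensor([1, 2, 3]),
    torch.tensor([4, 5]),
    torch.tensor([6]),
    torch.tensor([7, 8, 9, 10]),
    torch.tensor([11, 12]),
]

def collate_fn(batch):
    # Record the true lengths before padding so they can be used later.
    lengths = torch.tensor([len(seq) for seq in batch])
    # Pad every sequence with zeros up to the longest one in the batch.
    padded = pad_sequence(batch, batch_first=True, padding_value=0)
    return padded, lengths

loader = DataLoader(toy_dataset, collate_fn=collate_fn, batch_size=5)
padded, lengths = next(iter(loader))

# Use the lengths to drop the padding zeros again.
recovered = [row[:n] for row, n in zip(padded, lengths)]
```

Returning the lengths from the collate function keeps the padding information next to the batch, so downstream code can mask or slice without guessing which zeros are real data.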


huggingface.co › course › chapter3
A full training - Hugging Face Course. Finally, the learning rate scheduler used by default is just a linear decay from the maximum value (5e-5) to 0. To properly define it, we need to know the number of training steps we will take, which is the number of epochs we want to run multiplied by the number of training batches (which is the length of our training dataloader).

towardsdatascience.com › how-to-use-datasets-and
How to use Datasets and DataLoader in PyTorch for custom text ... May 14, 2021 · First, we create two lists called 'text' and 'labels' as an example. text_labels_df = pd.DataFrame({'Text': text, 'Labels': labels}): This is not essential, but Pandas is a useful tool for data management and pre-processing and will probably be used in your PyTorch pipeline. In this section the lists 'text' and 'labels ...

stackoverflow.com › questions › 44617871
How to convert a list of strings into a tensor in pytorch? The trick is first to find out the max length of a word in the list, and then in a second loop populate a zero-filled tensor so that shorter words are padded with zeros. Note that UTF-8 is a variable-width encoding, so a character can take more than one byte.
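The two-pass trick for turning a list of strings into a tensor can be sketched as follows. This is an illustrative reading of the description above, not the code from the linked answer; the `words` list is invented, and encoding to UTF-8 bytes with a `uint8` tensor is one assumed choice among several.

```python
import torch

# Hypothetical input strings.
words = ["cat", "horse", "ox"]

# First pass: encode each word to bytes and find the longest encoding.
encoded = [w.encode("utf-8") for w in words]
max_len = max(len(b) for b in encoded)

# Second pass: copy each byte string into a zero-initialised tensor,
# which leaves zero padding after the shorter words.
tensor = torch.zeros((len(encoded), max_len), dtype=torch.uint8)
for i, b in enumerate(encoded):
    tensor[i, : len(b)] = torch.tensor(list(b), dtype=torch.uint8)

# Round trip: strip the zero padding and decode back to a string.
first_word = bytes(tensor[0].tolist()).rstrip(b"\x00").decode("utf-8")
```

Measuring length in bytes rather than characters matters here because non-ASCII characters occupy more than one byte in UTF-8.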

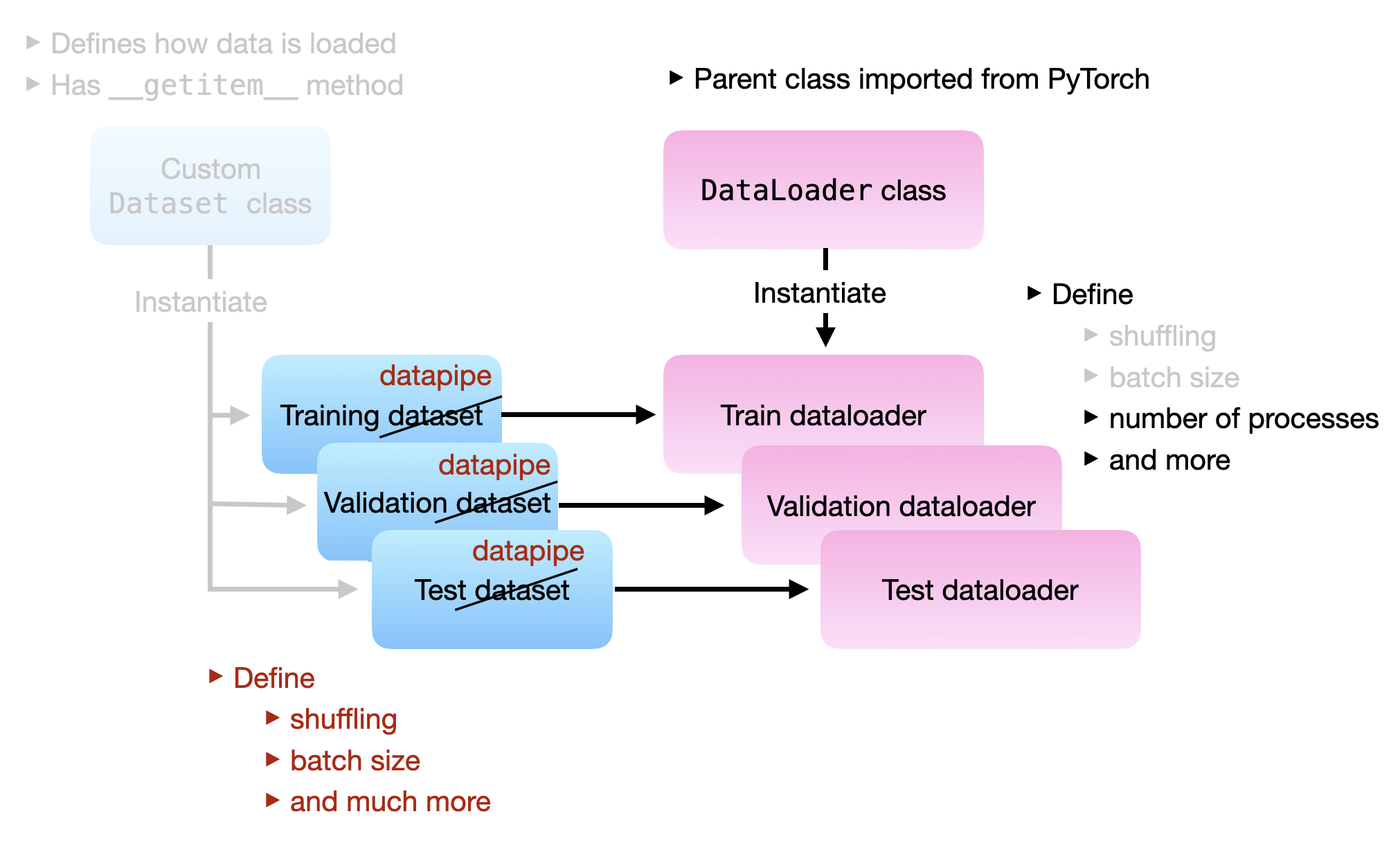

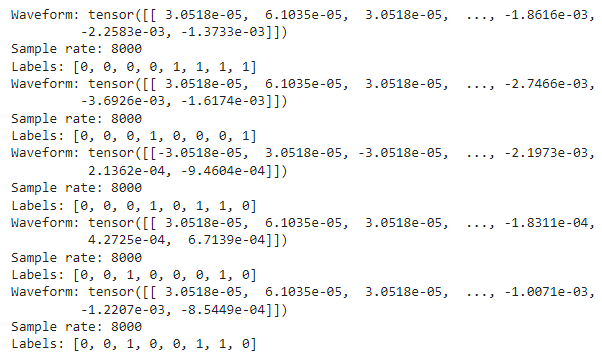

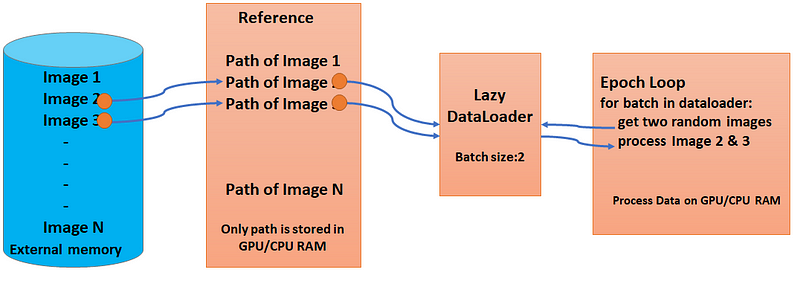

androidkt.com › load-pandas-dataframe-using
Load Pandas Dataframe using Dataset and DataLoader in PyTorch. Jan 03, 2022 · Then, the file output is separated into features and labels accordingly. Finally, we convert our dataset into torch tensors. Create DataLoader. To train a deep learning model, we need to create a DataLoader from the dataset. DataLoaders offer multi-worker, multi-processing capabilities without requiring us to write code for that.
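When there are no labels at all, the Dataset and DataLoader steps described for the pandas workflow above can be reduced to a dataset that returns only features. The class name `UnlabeledDataset` and the `rows` values below are hypothetical; in a pandas pipeline the rows would typically come from the DataFrame's feature columns, which this sketch replaces with a plain nested list to stay self-contained.

```python
import torch
from torch.utils.data import DataLoader, Dataset

class UnlabeledDataset(Dataset):
    """Hypothetical map-style dataset that yields features only."""

    def __init__(self, features):
        # Convert the raw rows to a float tensor once, up front.
        self.features = torch.as_tensor(features, dtype=torch.float32)

    def __len__(self):
        return len(self.features)

    def __getitem__(self, idx):
        # Return a single tensor instead of a (features, label) pair.
        return self.features[idx]

# Stand-in for feature rows extracted from a DataFrame.
rows = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6], [0.7, 0.8], [0.9, 1.0]]

loader = DataLoader(UnlabeledDataset(rows), batch_size=2, shuffle=False)

# Each batch is a plain tensor, so the loop variable needs no unpacking.
batch_shapes = [tuple(batch.shape) for batch in loader]
```

Because `__getitem__` returns one tensor, the default collation stacks samples into a single batch tensor, which suits inference or unsupervised training loops.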

![PyTorch [Basics] — Sampling Samplers | by Akshaj Verma ...](https://miro.medium.com/max/1400/1*3Dsdw-L4qVhT1WkyLvtsPg.jpeg)

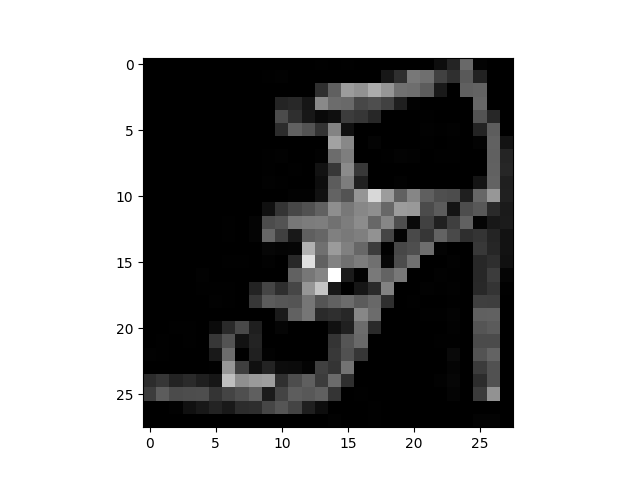

![[D] - Introduction to medical image processing with Python: CT ...](https://external-preview.redd.it/vQfUXp_PKhBmtX4y-uGitw6dGnNPkHBnpKALlulVPEs.jpg?width=640&crop=smart&auto=webp&s=768929b65bda2bc12b3c7667e139a1707a0fa215)
